GFLOPS by itself means 1000x1000 DP LINPACK GFLOPS.

SI/FP(16) 16.4/24.8 means SPECint95/SPECfp95 results with 16 CPUs are 16.4 and 24.8, respectively.
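The SI/FP shorthand above is regular enough to read mechanically. The following is only a sketch: `parse_spec` and its dictionary keys are hypothetical names, and it assumes the CPU count inside the parentheses is a bare integer, so it does not handle variants such as "SI/FP(2 R10000s)". A "??" entry (a result this page does not list) comes back as `None`.

```python
import re

# Hypothetical helper for the "SI/FP(n) a/b" shorthand used on this page.
_NUMBER = r"\d+(?:\.\d+)?"
_SPEC = re.compile(rf"SI/FP\((\d+)\)\s+({_NUMBER}|\?\?)/({_NUMBER}|\?\?)")


def _to_float(token):
    """Convert a result token to float; '??' means 'not listed'."""
    return None if token == "??" else float(token)


def parse_spec(text):
    """Return CPU count, SPECint95 and SPECfp95 from the first SI/FP entry."""
    match = _SPEC.search(text)
    if match is None:
        raise ValueError(f"no SI/FP entry in {text!r}")
    cpus, specint, specfp = match.groups()
    return {
        "cpus": int(cpus),
        "specint95": _to_float(specint),
        "specfp95": _to_float(specfp),
    }


print(parse_spec("SI/FP(16) 16.4/24.8"))
print(parse_spec("(SI/FP(8) ??/56.7)"))
```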

Alex Informatics AVX3: 32 PowerPC 604 scalar processors

(~4 GFLOPS.)

This seems to be a bunch of PowerPC machines hooked together in a distributed-memory setup. Boring.

An AVX3 at: City University of Hong Kong.

Connection Machines Services CM-5: 1024 SPARC vector-accelerated processors

(~1,000 GFLOPS/16,000 CPUs theoretical, 0.128 GFLOPS Peak/CPU.)

[No longer in production.]

Thinking Machines Corporation no longer makes hardware; its hardware arm was acquired by the Gores Technology Group and became CMS.

CM-5s at: The Army High Performance Computing Research Center,

and Ames Research Center, Moffett Field, CA,

and The University of Illinois at Urbana-Champaign's National Center for Supercomputing Applications (NCSA) (retired 31 January 1997),

and a CM-5 Guide.

Digital Equipment Corporation AlphaServer 8400 5/625: 14 DEC Alpha 21164 612.8 MHz scalar processors

(7.230 GFLOPS, 3.608 GFLOPS/8 CPUs, 0.764 GFLOPS/CPU. SI/FP(1) 18.4/20.8, SI/FP(8) ??/56.7)

These systems can run DIGITAL UNIX, OpenVMS, or, via a DEC installed "conversion option," NT 4.0.

The 5/440 models (14 CPUs, 437 MHz) can be clustered to form a distributed-memory 64 CPU system. However, the more CPU modules you put in (two Alphas per module), the less RAM you can put in, so a 14 CPU system can have only 4GB of RAM, whereas a 2 CPU system can have 28GB. Running NT, however, the system only scales to 14 CPUs.

AlphaServer 8400s at: Lycos.

Fujitsu Parallel Server AP3000 U300: 1024 UltraSPARC 300 MHz scalar processors

(SI/FP(1) 12.1/15.5.)

["The entry system of the AP3000 series starts at $138,000."]

Eight CPUs (nodes) go in a cabinet, and up to one hundred and twenty-eight cabinets can be joined to make a single computer. It seems that each node has four 400 MB/s network ("AP-Net") ports, and that multi-node computers are made by interconnecting these ports.

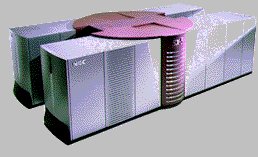

Fujitsu VPP700E: 256 Fujitsu CMOS processing elements

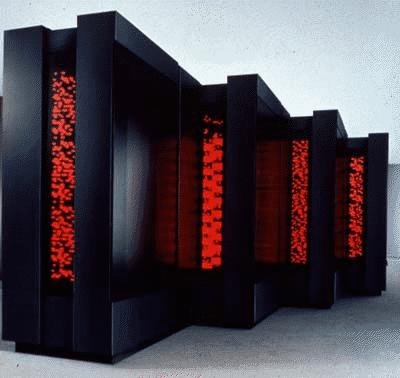

(614.4 GFLOPS Peak, 2.4 GFLOPS Peak/CPU.)

[Introduced March 4, 1996. 20 VX/VPP300/VPP700 computers in Europe as of July 1997.]

The processing elements (PEs) each contain a RISC scalar CPU and a vector CPU. The VXE Series holds 1-4 PEs, and the VPP300E Series 1-16 PEs. The VPP700E comes in dark red, dark blue or charcoal black, dark red being shown above.

A VPP700 at: Leibniz-Rechenzentrum der Bayerischen Akademie der Wissenschaften (LRZ).

Hewlett Packard 9000 V2500 Enterprise Server: 128 PA-8500 440 MHz processors

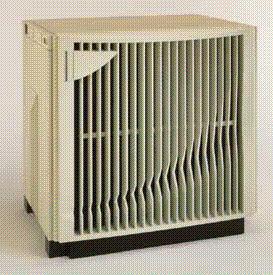

Hewlett Packard 9000 V2250 Exemplar: 16 PA-8200 240 MHz processors

(5.935 GFLOPS/16 CPUs, 15.360 GFLOPS Peak/16 CPUs, 0.743 GFLOPS/1 CPU, 0.960 GFLOPS Peak/1 CPU. SI/FP(16) 16.4/24.8.)
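The gap between the LINPACK and Peak figures is easier to judge as a ratio. Here is a rough sketch using only the V2250 numbers quoted above; the `efficiency` helper is a made-up name for illustration, not anything from HP or the LINPACK report.

```python
# Figures quoted above for the HP 9000 V2250.
LINPACK_16 = 5.935   # 1000x1000 DP LINPACK GFLOPS, 16 CPUs
PEAK_16 = 15.360     # GFLOPS Peak, 16 CPUs
LINPACK_1 = 0.743    # 1000x1000 DP LINPACK GFLOPS, 1 CPU
PEAK_1 = 0.960       # GFLOPS Peak, 1 CPU
CPUS = 16


def efficiency(measured, peak):
    """Fraction of the theoretical peak that the benchmark delivered."""
    return measured / peak


per_cpu_peak = PEAK_16 / CPUS
print(f"Peak per CPU derived from the 16-CPU figure: {per_cpu_peak:.3f} GFLOPS")
print(f"1-CPU efficiency:  {efficiency(LINPACK_1, PEAK_1):.1%}")
print(f"16-CPU efficiency: {efficiency(LINPACK_16, PEAK_16):.1%}")
```

On a problem as small as 1000x1000 the sixteen-CPU run delivers a much smaller share of peak than the single CPU does, which is worth keeping in mind when comparing the bare GFLOPS numbers on this page.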

["Starter system" priced at $196,200. 16GB RAM max.]

This is an interesting little cube, about 1x1x1 meter. It's hard to tell what is the front and what is the side. If you call the front where the key is, then the front is flat and has a CDROM, a DAT, a status panel display, and a key used to turn the system on and off. The picture above would then be of the front and the right-hand side. The left-hand side would then consist mostly of four large cooling fans. It appears the four fans unscrew separately, giving access to the CPUs, but then again, when the plastic covering the "back" of the system is taken off, it looks as if you could also gain access inside the system from there. The side I show above, with the grills, has a black dust filter behind it which seems to get covered in a few months even in a room with no carpet. This makes me suspect that the fans pull air into the system from the right-hand side, then across the CPUs in the middle, and then out the left-hand side.

Hewlett Packard Convex Exemplar SPP1200/XA: 128 PA-7200 120 MHz scalar processors

(30.7 GFLOPS Peak, 0.656 GFLOPS/8 CPUs, 0.240 GFLOPS/CPU.)

[No longer in production.]

8 CPUs are connected together in a shared-memory node, and these nodes are connected together with a crossbar to form the 128 CPU distributed-memory system. I'm not sure how many CPUs they managed to make work together in the shared-memory mode, but I recently heard that they managed to break 16.

Exemplars at: The University of Illinois at Urbana-Champaign's National Center for Supercomputing Applications (NCSA),

and The Naval Research Laboratory (NRL),

and Fluent Incorporated,

and The Scripps Research Institute.

Hitachi SR2201: 2048 Hitachi HARP-1E 150 MHz pseudo-vector RISC processors

(600 GFLOPS Peak.)

The "High-end model" has thirty-two to two thousand and forty-eight CPUs, and the "Compact model" has eight to sixty-four CPUs.

SR 2201s at: The High Performance Computing Center in Stuttgart,

and Cambridge University.

Hitachi S-3800/480: 4 500 MHz processors

(32 GFLOPS Peak, 20.640 GFLOPS.)

S-3800s at: The University of Tokyo,

and The Institute for Materials Research, Tohoku University, Japan,

and The Japanese Meteorological Center.

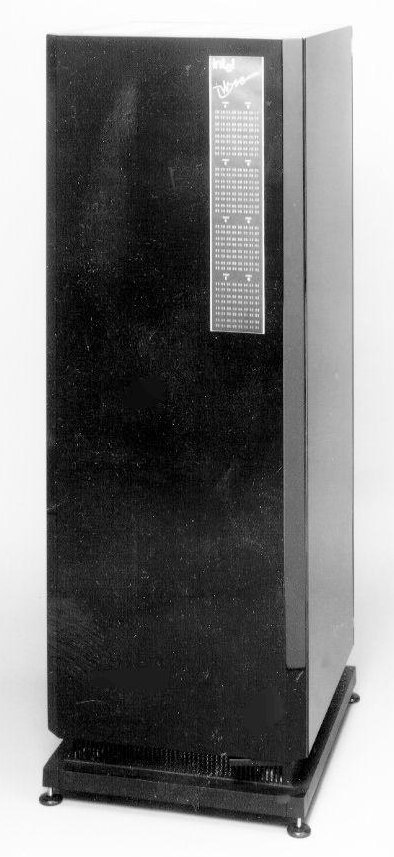

Intel iWarp: > 1500 custom scalar processors

(20.48 GFLOPS peak/1024 CPUs, 20 MIPS & 0.020 GFLOPS Peak/CPU.)

[No longer in production.]

Four CPUs go into a single board called a cell, which also contains memory and four 40 MBytes/sec full duplex I/O ports, and these cells are joined to make the computer. The picture above shows a 256 CPU rackmount. Be sure to check out the CMU link, as that is where this computer had its origin.

iWarps at: Carnegie Mellon University,

and NASA.

Intel Paragon: 9216 Pentium Pro 200 MHz scalar processors

(1,348 Rmax [235Kx235K] GFLOPS/9152 CPUs.)

[No longer in production.]

For a long time, the ASCI Red variant of this machine was the fastest LINPACK NxN benchmarked computer. Finally the IBM and SGI ASCI machines, which continued to grow in size while the Paragon stagnated, both managed to outpace it.

Paragons at: CRPC Caltech,

and The Advanced Computing Laboratory of The Los Alamos National Laboratory (LANL),

and The Center for Computational Sciences at Oak Ridge National Laboratory (CCS-ORNL),

and The University of Illinois at Urbana-Champaign Pablo Research Group,

and ASCI Red.

International Business Machines RS/6000 POWERparallel SP2: 512 Power2 or 64 PowerPC 604e scalar processors

(Thin Node 160MHz: 0.528 GFLOPS Peak, 0.3119 GFLOPS, SI/FP(1) 8.62/26.6.)

(PowerPC 604e 332MHz: 0.1157 GFLOPS, SI/FP(1) 14.4/12.6.)

Configurations greater than one hundred and twenty-eight processors (nodes) are considered "special requests." Each cabinet (system frame) holds sixteen nodes, communicating through an SP Switch at 110MB/second peak, full duplex, to make a 128p distributed-memory setup with 16p shared-memory cabinets. The Power2 nodes run at 120, 135 or 160 MHz, and the PowerPC nodes run at 200 or 332MHz.

SP2s at: The Maui High Performance Computing Center, Hawaii,

and The Cornell Theory Center,

and The University of Hong Kong Computer Centre,

and The University of Southern California,

and ACC CYFRONET - Kraków, Poland,

and ASCI Blue Pacific.

Kendall Square Research KSR-1: 1088 scalar processors

(43.520 GFLOPS Peak, 0.513 GFLOPS/32 CPUs.)

[Company no longer exists.]

KSRs at: The Center For Applied Parallel Processing, University of Colorado (CAPP),

and The North Carolina Supercomputer Center (NCSC),

and Manchester Computing, University of Manchester (MCC),

and some old information from NASA,

and what happened to KSR.

MasPar MP-1/MP-2: 16,384 custom CMOS scalar processors

(1.2 GFLOPS/MP1, 6.3 GFLOPS, 1.6 Rmax [11.264Kx11.264K] GFLOPS/MP2.)

[Introduced January 1990. Over 200 shipped. MP1 $75,000 - $890,000; MP2 $160,000 - $1.6 million. Company no longer makes hardware.]

MP-1/MP-2s at: Laboratoire d'Informatique Fondamentale de Lille,

and The University of Tennessee,

and The Scalable Computing Laboratory of Ames Laboratory,

and someone's MS thesis on an MP-1 at The Polaroid Machine Vision Laboratory at Worcester Polytechnic Institute,

and a report from Purdue.

Meiko CS-2: >800 SPARC 90 MHz scalar processors, or SPARCs with Fujitsu vector processors

(0.180 GFLOPS/scalar CPU, 0.200 GFLOPS/vector CPU.)

The scalar optimized CS-2 uses two SPARC CPUs as a processing element (PE), while the vector version uses a SPARC CPU and two Fujitsu vector units as a PE.

A single processor board, part of a "module", contains up to 4 PEs. These modules interconnect in rackmounts in groups of four, and from the pictures, it seems that 24 module racks then interconnect via a central switch, which is how larger systems are made.

Meikos at: British Shoe Corporation,

and Bass Taverns,

and Lawrence Livermore National Laboratory (LLNL) [Decommissioned August 1998].

nCUBE nCUBE2 and MediaCUBE: 512 nCUBE vector processors

(0.204 GFLOPS, 1.205 GFLOPS Peak, 0.00235 GFLOPS/CPU.)

[No longer in production.]

nCUBE2s at: The Alabama Supercomputer Authority (ASA),

and The MIT Earth Resources Laboratory,

and The Scalable Computing Laboratory of Ames Laboratory.

NEC SX-5: 512 3.3V CMOS 250 MHz vector processors

(4096 vector GFLOPS Peak/512 CPUs, 8 vector GFLOPS Peak/CPU, 0.500 scalar GFLOPS Peak/CPU.)

[Introduced June 1998. Shipping December 1998.]

This computer, like the SX-4, has a small and a large chassis model. The 4 TFLOPS configuration is made by combining several large ("A") chassis, each of which holds 16 CPUs (=128 GFLOPS Peak) and 128 GB of RAM. This RAM is shared across the 16 CPUs, which each have 16 vector pipes. The nodes are then connected by what NEC calls an "IXS Internode Crossbar Switch." Of course, the memory on each different node is then distributed, not shared. A half-sized chassis, called a "B chassis," holds 8 CPUs, giving the user 64 GFLOPS Peak and 64 GB of RAM.
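The chassis figures for the SX-5 can be checked with a few lines of arithmetic. This is only a sketch built from the numbers quoted on this page (8 vector GFLOPS Peak per CPU, 16 CPUs per A chassis, 8 per B chassis, 512 CPUs in the heading); the constant names are invented for illustration.

```python
# Numbers taken from the SX-5 entry on this page.
GFLOPS_PER_CPU = 8        # vector GFLOPS Peak per CPU
CPUS_PER_A_CHASSIS = 16   # large chassis
CPUS_PER_B_CHASSIS = 8    # half-sized chassis
MAX_CPUS = 512            # CPU count from the entry heading

a_chassis_peak = CPUS_PER_A_CHASSIS * GFLOPS_PER_CPU
b_chassis_peak = CPUS_PER_B_CHASSIS * GFLOPS_PER_CPU
a_chassis_needed = MAX_CPUS // CPUS_PER_A_CHASSIS
system_peak = a_chassis_needed * a_chassis_peak

print(f"A chassis: {a_chassis_peak} GFLOPS Peak")
print(f"B chassis: {b_chassis_peak} GFLOPS Peak")
print(f"{a_chassis_needed} A chassis give {system_peak} GFLOPS Peak")
```

Under these assumptions the 4096 GFLOPS Peak figure corresponds to 32 A chassis joined through the internode crossbar.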

NEC SX-4/32: 32 custom 155 MHz vector processors

(64 GFLOPS Peak, 66.530 Rmax [15Kx15K] GFLOPS, 31.060 GFLOPS/32 CPUs, 1.944 GFLOPS/1 CPU.)

The SX-4A (Large Memory Models) have 1-16 CPUs and 32GB of RAM. The Compact Models have 1-4 CPUs and 4GB of RAM. The SX-4 Multi-node Model interconnects SX-4/32 single nodes (pictured above) via an inter-node crossbar switch (model 512M16) or a HiPPI switch (model 512H16), for up to 1,000 GFLOPS Peak/512 CPUs.

SX-4s at: Centro Svizzero di Calcolo Scientifico (CSCS),

and The High Performance Computing Center in Stuttgart,

and Deutsches Zentrum für Luft- und Raumfahrt (DLR) WT-DV-IG Göttingen.

Siemens-Pyramid RM600 E Enterprise Systems: 8 or 24 MIPS R10000 250 MHz or R12000 300 MHz scalar processors

(SI/FP(1) 14.7/??.)

[Eight CPU RM600 E20 model "begins at $75,000." Twenty-four CPU E60 model "begins at $225,000."]

The E60 consists of "cells" containing up to six processors (nodes), and these cells are stacked two high and then side-by-side for larger configurations.

Siemens-Pyramid Reliant RM 1000: 768 MIPS R4400 250 MHz scalar processors

(SI/FP(1) 5.05/??.)

[No longer in production.]

"Cells" contain up to six processors (nodes), and these cells are stacked two high and then side-by-side for larger configurations.

RM 1000s at: Solitaire Interglobal, Ltd.

Silicon Graphics Challenge XL: 1-36 MIPS R4400 / R8000 / R10000 scalar processors

(2.700 GFLOPS/36 R4400s; 3.240 GFLOPS/16 R8000s, 6.48 GFLOPS Peak/18 R8000s; 13.5 GFLOPS Peak/36 R10000s. SI/FP(2 R10000s) 8.75/13.8.)

[Production ended June 1998.]

The naming convention for this machine is a disaster. R4400-based machines were called "Challenges," and R8000-based machines were called "Power Challenges." Then the R10000 came out, and people started calling them "Power Challenges" as well, and the label on the front of the machine said either "POWER CHALLENGE 10000" or "CHALLENGE 10000." The Challenge M and Challenge S are completely different single CPU machines, having no parts in common with the XL, unlike the L and DM--deskside machines which do share many parts with the XL.

A rare configuration, called a POWER CHALLENGEarray, is made by taking up to eighteen R8000 or thirty-six R10000 CPUs in a single cabinet (a POWERnode, shown above), and combining up to eight such nodes via HIPPI to make a distributed-memory array, ~109 GFLOPS Peak R10000, 26.7 GFLOPS/128 R8000 90MHz CPUs [N ~ 53,000].

Challenge XLs at: Florida State University,

and Virginia Commonwealth University,

and University of Utah,

and Masaryk University, Czech Republic.

Silicon Graphics Origin 2000: MIPS R12000 400 MHz scalar processors

(R10K CPUs: 1.608 Rmax TFLOPS/5040 CPUs; 13.826 Rmax GFLOPS/32 CPUs [N~36,000]. SI/FP(1) 14.7/24.5.)

[Introduced October 1996.]

Another naming disaster: smaller systems used to be called SGI Origins, and larger systems Cray Origins. That practice has now disappeared. Eight CPUs go in a module, two modules go in a rack, and up to sixteen racks (and two metarouters) can be joined to make a single shared-memory computer. At the ASCI project, linked below, forty-eight 128p systems are combined with HIPPI switches to make a 6144p distributed-memory system using message-passing between the machines.
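The Origin 2000 packaging numbers above multiply out as follows. This is a quick sketch using only the counts in the paragraph; the variable names are made up for illustration.

```python
# Packaging counts quoted in the paragraph above.
CPUS_PER_MODULE = 8
MODULES_PER_RACK = 2
MAX_RACKS = 16

cpus_per_rack = CPUS_PER_MODULE * MODULES_PER_RACK
max_shared_memory_cpus = cpus_per_rack * MAX_RACKS

# ASCI configuration: 128p shared-memory systems joined by HIPPI.
ASCI_SYSTEMS = 48
CPUS_PER_ASCI_SYSTEM = 128
asci_total_cpus = ASCI_SYSTEMS * CPUS_PER_ASCI_SYSTEM
racks_per_asci_system = CPUS_PER_ASCI_SYSTEM // cpus_per_rack

print(f"Largest single shared-memory machine: {max_shared_memory_cpus} CPUs")
print(f"ASCI system: {asci_total_cpus} CPUs, {racks_per_asci_system} racks per 128p machine")
```

So each 128p machine at ASCI is half the size of the largest shared-memory configuration, and the 6144p total is reached only through message-passing between machines.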

Origin 2000s at: The Advanced Computing Laboratory of The Los Alamos National Laboratory (LANL),

and The Institute for Chemical Research of Kyoto University, Japan,

and ASCI Blue Mountain.

Cray C90: 16 vector processors

(10.780 GFLOPS, 16 GFLOPS Peak.)

[Announced November 1991. No longer in production, replaced by the Cray T90.]

C90s at: Pittsburgh Supercomputing Center (PSC),

and San Diego Supercomputer Center (SDSC),

and The National Aeronautics and Space Administration (NASA),

and National Energy Research Scientific Computing Center (NERSC),

and The National Supercomputer Centre in Sweden.

Cray J90 (Classic)/J90se: 32 vector processors

(J916: 2.471 GFLOPS/16 of 32 CPUs; J932se: 6.4 GFLOPS Peak/32 CPUs.)

["Systems start at $500,000." Over 400 sold. Replaced by the Cray SV1.]

The J90se (Scalar Enhanced) scalar CPU chips run twice as fast as those on the J90 Classic (200 versus 100 MIPS), and the J90se has GigaRing I/O channels instead of VME I/O channels, along with being able to handle more RAM. J90se CPUs can be put into J90 Classics. These systems are binary compatible with the Y-MP line, and are being replaced by the SV1.

J90s at: The Pittsburgh Supercomputing Center (PSC),

and The Texas A&M University Supercomputing Facility,

and Núcleo de Atendimento em Computação de Alto Desempenho,

and The CLRC/RAL Atlas Cray Supercomputing Service.

Cray MTA: 4 vector processors

(1 GFLOPS/CPU.)

[First installation December 1997. $73,500 from SDSC for installing it, and another $2M or so since then for upgrades, etc.]

Early two processor benchmarks show the machine to be 10-20% slower than a Cray T90, but more recent benchmarks (NAS, etc.) give much, much stronger results. I'll look through them sometime soon and post a summary.

I can find only one installed system, and it has just been upgraded to 4 CPUs:

The MTA at: The San Diego Supercomputer Center (SDSC).

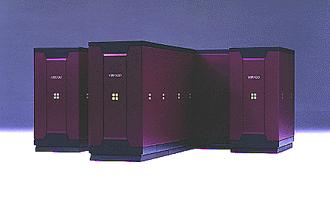

Cray SV1: 6 300 MHz vector Multi-Streaming Processors, 8 "standard" vector processors

(38.4 GFLOPS Peak/node; 4.8 GFLOPS Peak/MSP; 1.2 GFLOPS Peak/standard processor; 1228.8 GFLOPS Peak/32 node system; 1 TB RAM.)

[Introduced 16 June 1998. "[S]lated for availability starting in August 1998 from U.S. list prices of $500,000..."]

The photo above just doesn't do this box justice--you really have to see the shade of blue used in person to fully appreciate it. The yellow lights in the black, V-shaped protrusion are also particularly evil looking! The Multi-Streaming Processors (MSPs) have eight vector pipes, and these pipes can be run together as a single unit, or can be split to run as four two-pipe units. Each two-pipe unit runs at 1.2 GFLOPS Peak, so the four pairs together run at 4.8 GFLOPS Peak. If you have a small number of users that run big jobs, then you'd configure the MSPs as a single eight-pipe unit, apparently, and vice-versa otherwise. A "node" contains six MSPs and eight "standard" processors, and 32 of these nodes can be connected together, creating a 1.2+ TFLOPS Peak system. The individual SMP nodes are connected together with what Cray calls "superclustering" to form the 1.2+ TFLOPS Peak system, with the information passing back and forth between the nodes using message-passing programming, so in this regard this system is like the SGI POWER CHALLENGEarray: it's a group of SMP racks connected together with parallelization of programs over nodes done by message-passing routines. Cray seems to be making a big deal about how they are the first to use "vector cache memory" with the SV1, but I haven't seen a good explanation of it yet so I won't go into it here. It's 256K, 4-way associative, with 8 bytes per cache line. The existing Cray J90/J90se product can be upgraded to these new CPUs, and the SV1 maintains binary compatibility with the J90 and Y-MP.
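The SV1 peak figures quoted for this entry follow from the pipe counts in the paragraph above. Here is a small sketch of that arithmetic; the constant names are invented here, and the values are the ones given on this page.

```python
# Values quoted for the SV1 on this page.
PIPE_PAIR_GFLOPS = 1.2         # GFLOPS Peak per two-pipe unit
PAIRS_PER_MSP = 4              # eight pipes split into four two-pipe units
MSPS_PER_NODE = 6
STANDARD_CPUS_PER_NODE = 8
STANDARD_CPU_GFLOPS = 1.2      # GFLOPS Peak per "standard" processor
MAX_NODES = 32

msp_peak = PAIRS_PER_MSP * PIPE_PAIR_GFLOPS
node_peak = (MSPS_PER_NODE * msp_peak
             + STANDARD_CPUS_PER_NODE * STANDARD_CPU_GFLOPS)
system_peak = MAX_NODES * node_peak

print(f"MSP:    {msp_peak:.1f} GFLOPS Peak")
print(f"Node:   {node_peak:.1f} GFLOPS Peak")
print(f"System: {system_peak:.1f} GFLOPS Peak")
```

The node total counts both the six MSPs and the eight standard processors, which is how the per-node and 32-node figures in the summary line are reached.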

Cray T3E-1200E: 2048 DEC Alpha 21164 600MHz scalar processors

(2400 GFLOPS Peak.)

[Six CPUs "starting at $630,000."]

Two hundred and fifty-six CPUs go in a liquid-cooled cabinet, and up to eight cabinets can be joined to make a distributed-memory system. The E at the end of the 1200 is an upgrade that takes the "measured interprocessor bandwidth from 330 to 420 megabytes per second" along with a few other improvements. An air-cooled model is also available; it holds up to 128 CPUs.

T3s at: The San Diego Supercomputer Center (SDSC),

and The High Performance Computing Center in Stuttgart,

and The Edinburgh Parallel Computing Centre at The University of Edinburgh, UK (EPCC),

and The Pittsburgh Supercomputing Center (PSC).

Cray T90: 4 (T94) or 32 (T932) vector processors

(1.8 GFLOPS/CPU, 57.6 GFLOPS Peak.)

[Introduced 22 February 1995. T94 >= $2.5 million ; T932 <= $41 million.]

The T94 uses a completely different chassis than the T932 chassis shown above. The T916 is a T932 chassis with 16 CPUs on one side of the machine, with the other side being empty. T916s cannot be upgraded to T932s though--if you ever see one with the skins off, you'll know why immediately. These systems are binary compatible with the Cray C90.

T90s at: Leibniz-Rechenzentrum der Bayerischen Akademie der Wissenschaften (LRZ),

and The Army Research Laboratory, Aberdeen Proving Ground, MD (ARL-APG),

and HLRZ, Forschungszentrum Jülich.

Cray Y-MP8E: 8 vector processors

(2.144 GFLOPS.)

[Y-MP8 introduced March 1991. No longer in production, replaced by the J90.]

Did somebody say OWS-E?

Y-MP8s at: The National Cancer Institute (YMP8/8128),

and NOAA's Geophysical Fluid Dynamics Laboratory (YMP8/832).

Sun Microsystems HPC 6500: 30 UltraSPARC II 336 MHz processors

(5.19 GFLOPS, 20.16 GFLOPS Peak, 17.89 Rmax GFLOPS LINPACK. SI/FP(1) ??/9.37, SI/FP(12) ??/22.6.)

This system has 16 slots in the back, one of which must be used for a CPU/RAM card and another for an I/O card. The CPU/RAM cards hold 1 or 2 CPUs and 2GB of RAM. (16 slots - 1 slot for I/O) * 2 GB RAM per board = 30 GB RAM maximum. 0.99 x 0.77 x 1.73 meters, LxWxH.
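The slot arithmetic for the HPC 6500 can be written out as a short sketch. The slot, CPU, and RAM counts come from the paragraph above. The peak line assumes two floating-point operations per clock per CPU; that is an assumption on my part, though it does reproduce the 20.16 GFLOPS Peak figure quoted for this machine.

```python
# Counts from the paragraph above.
TOTAL_SLOTS = 16
IO_SLOTS = 1              # at least one slot must hold an I/O card
CPUS_PER_BOARD = 2
RAM_GB_PER_BOARD = 2
CLOCK_MHZ = 336

# Assumption (not stated on this page): 2 floating-point operations per clock.
FLOPS_PER_CLOCK = 2

cpu_boards = TOTAL_SLOTS - IO_SLOTS
max_cpus = cpu_boards * CPUS_PER_BOARD
max_ram_gb = cpu_boards * RAM_GB_PER_BOARD
peak_gflops = max_cpus * CLOCK_MHZ * FLOPS_PER_CLOCK / 1000

print(f"{cpu_boards} CPU/RAM boards: {max_cpus} CPUs, {max_ram_gb} GB RAM")
print(f"Estimated peak: {peak_gflops:.2f} GFLOPS")
```

Every extra I/O card costs one CPU/RAM board, so raising `IO_SLOTS` in the sketch lowers the CPU count, the RAM ceiling, and the peak together.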

HPC 6000s at: The University of Alabama,

and National Partnership for Advanced Computational Infrastructure (UCSD NPACI).

Sun Microsystems HPC 10000: 64 UltraSPARC II 336 MHz processors

(42.62 GFLOPS Peak, 34.17 MP LINPACK GFLOPS.)

It seems that this system can be set up in 4p to 24p shared-memory "nodes" with message-passing taking it up to the 64p limit. I've recently heard that they have this up to 60p shared-memory now.

Supercomputer Systems Ltd. GigaBooster: 7 DEC Alpha 21066 233 MHz processors

(1.6 GFLOPS Peak.)

I'm not sure why I list this computer here on the supercomputer page. It breaks 1 GFLOPS, sure, but then again so does an SGI Octane--a two CPU desktop graphics workstation--and I don't list that box here.

GigaBoosters at: The High Performance Computing Group of the Electronics Laboratory, ETH Zurich.

Currently maintained, just barely, by pen7cmc. You can mail me at pen7cmc at yahoo dot com. I don't keep a link here because I receive an intolerable amount of junk email. I know this is horribly out of date; I'll update it sometime soon...really!

Previously this was Jonathan Hardwick's Supercomputer GIFs page.