We have a gap between 6.8 min and 12.5 min, an increase of about 84% (!), and everybody who has experience with film development knows that results for the same film developed for times this far apart will definitely NOT be the same: either the film will be extremely underdeveloped at 6.8 min or, if the shorter time is OK, overdeveloped at 12.5 min.
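The size of that gap is easy to check. A minimal sketch (the function name `relative_gap_percent` is just illustrative) expresses the longer time as a percentage increase over the shorter one:

```python
def relative_gap_percent(short_min: float, long_min: float) -> float:
    """How much longer the long time is, as a percentage of the short time."""
    return (long_min - short_min) / short_min * 100.0


gap = relative_gap_percent(6.8, 12.5)
print(f"{gap:.0f}%")  # 84%
```

In other words, the longer time is almost double the shorter one.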

See the values, which were determined starting with chilled water; the temperature was then raised by adding two batches of warm water:

Again we see poor uniformity! The last line shows the difference between the lowest and the highest temperature reading: A shows a 10% larger difference than F and G.

How can we live with such uncertainties? Easy! I've declared the precision mercury thermometer my standard thermometer, and the precision alcohol thermometer my work thermometer.

This minimizes the potential danger from the mercury (its vapours are poisonous, and vapours may be released if the thermometer breaks), because this thermometer rests in a protective wooden box in a safe place.

I've glued a small table in an easily reachable place that gives me the values read from C and the corresponding 'real' values of D. As the second table shows, the relative accuracy of both precision thermometers is nearly the same (16.2 and 16.1), so they are "precise" in terms of relative accuracy, but badly adjusted!
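Why nearly equal spans mean "precise but badly adjusted" can be put in numbers. This sketch assumes that 16.2 and 16.1 are the spans (highest minus lowest reading) of C and D over the same test run; the function names are hypothetical. Their ratio differs from 1 by only about 0.6%, so apart from the fixed offset the two thermometers drift apart by only a few hundredths of a degree over a 5 K change.

```python
# Spans quoted in the text for C and D; assumed to be highest minus lowest
# reading over the same test run, in K.
SPAN_C = 16.2
SPAN_D = 16.1


def scale_mismatch(span_a: float, span_b: float) -> float:
    """Relative difference in scale (slope) between two thermometers."""
    return span_a / span_b - 1.0


def extra_disagreement_k(delta_t_k: float, span_a: float, span_b: float) -> float:
    """Disagreement beyond the fixed offset that builds up over a temperature change."""
    return delta_t_k * scale_mismatch(span_a, span_b)


print(f"{scale_mismatch(SPAN_C, SPAN_D):.2%}")  # 0.62%
print(round(extra_disagreement_k(5.0, SPAN_C, SPAN_D), 2))  # 0.03
```

The remaining error is therefore dominated by the constant offset, which a correction table removes.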

Here is my way to achieve reproducible development conditions:

WORK THERMOMETER

From my table I know that when I want water at 19.2C, the scale must show 20.6C. If my work thermometer breaks, I'll buy a new one and standardize it against my 'standard' thermometer.

Give an alcohol thermometer some time, at least 1 minute, before you trust the temperature shown. Also take into account that standard thermometers must be fully immersed to show exact temperatures (for me this means dipping the thermometer so deep into the liquid that the whole alcohol column is below the liquid surface).

As a test, have two glasses of water ready, one filled with cold tap water, the other with warm tap water. Put the thermometer into the cold water, stir a little and wait until the temperature shown no longer changes. You will see that this takes some time. Now put the thermometer into the warm water. The temperature will rise, but note how slowly the upper stable value is reached, even when you stir with the thermometer. It might take up to a minute until the reading no longer changes. However: when you see the alcohol column reaching the upper limit of the scale, immediately remove the thermometer from the warm water, otherwise it will burst! (Here we may not share a common understanding of what is warm and what is hot!)
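The slow creep toward the final value can be illustrated with a simple first-order model (Newton's law of cooling): the reading approaches the water temperature exponentially with a time constant tau. This is an assumption, not a measured property of these thermometers, and the 10 s time constant in the usage line is purely hypothetical.

```python
import math


def reading_after(t_s: float, start_c: float, water_c: float, tau_s: float) -> float:
    """First-order model: reading after t_s seconds in water at water_c."""
    return water_c + (start_c - water_c) * math.exp(-t_s / tau_s)


def settle_time(start_c: float, water_c: float, tau_s: float, tolerance_k: float = 0.1) -> float:
    """Seconds until the modelled reading is within tolerance_k of the water temperature."""
    gap = abs(start_c - water_c)
    if gap <= tolerance_k:
        return 0.0
    return tau_s * math.log(gap / tolerance_k)


# Hypothetical: from 15C cold water into 35C warm water, tau = 10 s.
print(round(settle_time(15.0, 35.0, tau_s=10.0)))  # 53
```

Under this model the last few tenths of a degree take the longest, which is why waiting "at least 1 minute" is sensible.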

A table converting work-thermometer readings to standard temperatures looks like this:

| T read from work thermometer | 19.1C | 20.1C | 21.1C | 22.1C | 23.1C |
|---|---|---|---|---|---|
| equals T real (according to standard thermometer) | 18.0C | 19.0C | 20.0C | 21.0C | 22.0C |

(In this case the reading on the work thermometer is generally 1.1C too high; because both thermometers rise at the same rate, this difference stays constant.) So when I want 20C, I mix until it reads 21.1C! Luckily, I just have to add or subtract a constant value.
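The constant-offset correction from the table can be sketched as follows. The calibration pairs are the example values from the table above; the function names and the 0.05 K tolerance used to decide whether the offset is really constant are assumptions for illustration.

```python
# Example table: (reading on work thermometer, real temperature per standard thermometer), in C
CALIBRATION = [(19.1, 18.0), (20.1, 19.0), (21.1, 20.0), (22.1, 21.0), (23.1, 22.0)]


def constant_offset(table, tolerance_k=0.05):
    """Return the mean of (reading - real) if it is the same for all rows, else raise."""
    offsets = [read - real for read, real in table]
    if max(offsets) - min(offsets) > tolerance_k:
        raise ValueError("deviation is not constant; a correction line is needed")
    return sum(offsets) / len(offsets)


OFFSET = constant_offset(CALIBRATION)


def reading_for_real(real_target):
    """Work-thermometer reading to mix to when real_target is wanted."""
    return real_target + OFFSET


def real_for_reading(reading):
    """Real temperature for a given work-thermometer reading."""
    return reading - OFFSET


print(round(reading_for_real(20.0), 1))  # 21.1
```

The check inside `constant_offset` guards against silently applying a single offset to a thermometer whose slope differs.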

If the deviation itself changes linearly with temperature (i.e., the slopes differ), the table will look different and we may have to plot a correction line.

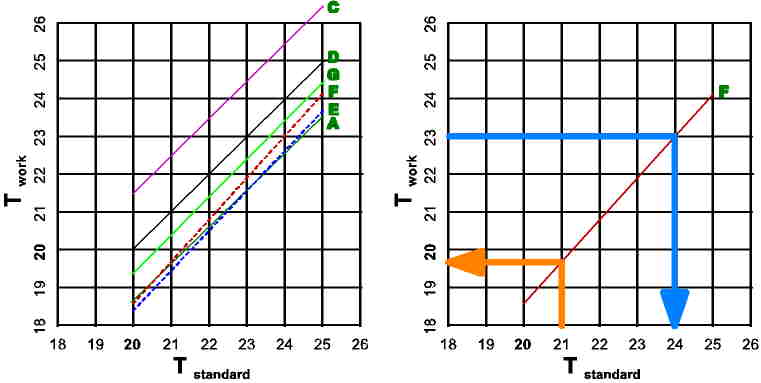

In the charts above, the left chart shows the temperature read on each thermometer (except B) versus the temperature shown on the reference thermometer D. It is clearly visible that G and C (and nearly A) have the same slope as D, so the difference is just a constant value at all temperatures. A, E, and F (the 'new' way of manufacturing thermometers) show a different slope, which makes correcting the reading more complex. This can be seen in the right chart: when we want to adjust the temperature of something to, e.g., 21C, the work thermometer F has to read 19.7C. Conversely, when this thermometer reads 23C, the real temperature is 24C.
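For a thermometer like F, whose slope differs from D, a constant offset is not enough. As a minimal sketch, assuming F's deviation is linear over this range, the two pairs taken from the right chart (reading 19.7C means 21C real; reading 23C means 24C real) define a correction line. The function names are hypothetical.

```python
def line_through(p1, p2):
    """Slope and intercept of the straight line through two (reading, real) points."""
    (x1, y1), (x2, y2) = p1, p2
    slope = (y2 - y1) / (x2 - x1)
    return slope, y1 - slope * x1


# Two calibration points for thermometer F from the right chart: (reading, real), in C
SLOPE, INTERCEPT = line_through((19.7, 21.0), (23.0, 24.0))


def real_from_reading(reading):
    """Real temperature for a given reading on F (linear model)."""
    return SLOPE * reading + INTERCEPT


def reading_for_real(real_target):
    """Reading on F to aim for when a given real temperature is wanted."""
    return (real_target - INTERCEPT) / SLOPE


print(round(reading_for_real(22.0), 2))  # 20.8
```

With more than two calibration points, a least-squares line would average out individual reading errors instead of relying on just two readings.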

STANDARDIZED DEVELOPMENT TIMES

My development times are tuned for each film/developer/dilution/agitation combination to give optimum negative contrast for my enlarger, and I measure the temperature with my work thermometer.

DEVELOPMENT AT 20C

Thanks to a very efficient heat-insulated development tank (to be described on my pages in the future), development always starts at 20C, and after 15 min the temperature deviates by at most 0.5C from the starting temperature. These prerequisites allow me to control the negative development process completely and precisely.

And electronic thermometers? Are they more accurate? No, not at all. Most electronic thermometers are specified with an accuracy of +/- 1C and a resolution of 0.1K. This is the same situation as with the precision thermometers above: the relative accuracy is OK (resolution of 0.1K), while the absolute accuracy is not (+/- 1C)!

Why do we have these problems?

Temperature is a physical quantity that we can't see (sight is our sharpest sense) but only feel, and feeling is very imprecise. It is measured by watching changes in physical properties that react to temperature. With traditional thermometers this is the thermal expansion of mercury or alcohol. The liquid stored in the bulb expands almost exactly in proportion to temperature, and the additional volume goes into the capillary of the thermometer. That's why the column grows with rising temperature, and why a thermometer bursts when the liquid column reaches the upper end: with no space left for expansion, something has to crack, because liquids and solids are nearly incompressible (compared with gases) and thermal expansion can generate enormous forces.

It is possible to produce very precise capillary tubes for the thermometer column, but the bulb volume MUST exactly match a given value; otherwise the absolute thermal expansion is too large or too small. If the required value is not hit exactly, a bulb that is too large produces a 'nervous' instrument that overreacts to temperature changes, while a bulb that is too small reacts 'lazily' to changes in its environment. All this seems controllable! But as the thermometer comparison above shows, it is difficult.
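How strongly the bulb volume drives the scale can be sketched with the basic relation: the extra volume beta * V * dT must fit into a capillary of cross-section pi * d^2 / 4. This is a simplified model that neglects the expansion of the glass; the coefficient of roughly 1.1e-3 per K for alcohol and the 100 mm³ bulb and 0.2 mm capillary in the usage lines are assumed, illustrative values.

```python
import math

# Approximate volumetric expansion coefficient of ethanol, 1/K (assumed, illustrative).
BETA_ALCOHOL = 1.1e-3


def column_rise_mm(bulb_volume_mm3, capillary_diameter_mm, delta_t_k, beta=BETA_ALCOHOL):
    """Growth of the liquid column for a temperature rise delta_t_k (glass expansion neglected)."""
    area_mm2 = math.pi * (capillary_diameter_mm / 2.0) ** 2
    return beta * bulb_volume_mm3 * delta_t_k / area_mm2


def indicated_change_k(true_change_k, actual_bulb_mm3, nominal_bulb_mm3):
    """Change shown by a scale engraved for the nominal bulb when the actual bulb differs."""
    return true_change_k * actual_bulb_mm3 / nominal_bulb_mm3


print(round(column_rise_mm(100.0, 0.2, 1.0), 1))  # 3.5 (mm per K)
print(indicated_change_k(10.0, 102.0, 100.0))     # 10.2
```

A bulb only 2% too large makes the instrument show 10.2 K for a real 10 K change: exactly the 'nervous' behaviour, i.e. a slope error like those in the charts.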

With electronic thermometers we usually look at the temperature-dependent resistance of a material, or at something like a law of nature: the voltage across a pn junction inside an integrated circuit changes by about -2mV/K. To resolve 0.1K, the device therefore has to handle a voltage difference of 0.2mV, and 200µV is a very small voltage that is difficult to handle. Again, adjusting to absolute values is the problem; the relative accuracy usually is not.
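The numbers in this paragraph follow from a simple linear model of the junction voltage. This sketch uses only the -2mV/K figure from the text and ignores sensor nonlinearity and amplifier details; the function names are hypothetical. It also shows why a tiny uncorrected voltage offset already produces an absolute error of the size quoted in the specifications.

```python
# Approximate pn-junction temperature coefficient from the text, mV per K.
SENSITIVITY_MV_PER_K = -2.0


def voltage_step_uv(delta_t_k, sensitivity_mv_per_k=SENSITIVITY_MV_PER_K):
    """Voltage change in microvolts caused by a temperature change delta_t_k."""
    return sensitivity_mv_per_k * delta_t_k * 1000.0


def temperature_error_k(offset_mv, sensitivity_mv_per_k=SENSITIVITY_MV_PER_K):
    """Absolute temperature error caused by an uncorrected constant voltage offset."""
    return abs(offset_mv / sensitivity_mv_per_k)


print(voltage_step_uv(0.1))      # -200.0 (microvolts per 0.1 K step)
print(temperature_error_k(2.0))  # 1.0 (K for a 2 mV offset)
```

A constant 2 mV error shifts every reading by 1 K while the 0.1 K steps remain perfectly visible: good relative accuracy, poor absolute accuracy.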

In general, temperature is a physical property that is not easy to measure very accurately. However, we can trust that we measure temperature quite reproducibly for our own process. But when we tell a friend to develop a film at such-and-such a temperature with a given developer (and dilution) and agitation, he will very likely fail to get comparable results, because his thermometer will very likely show different temperatures from ours.